Summary

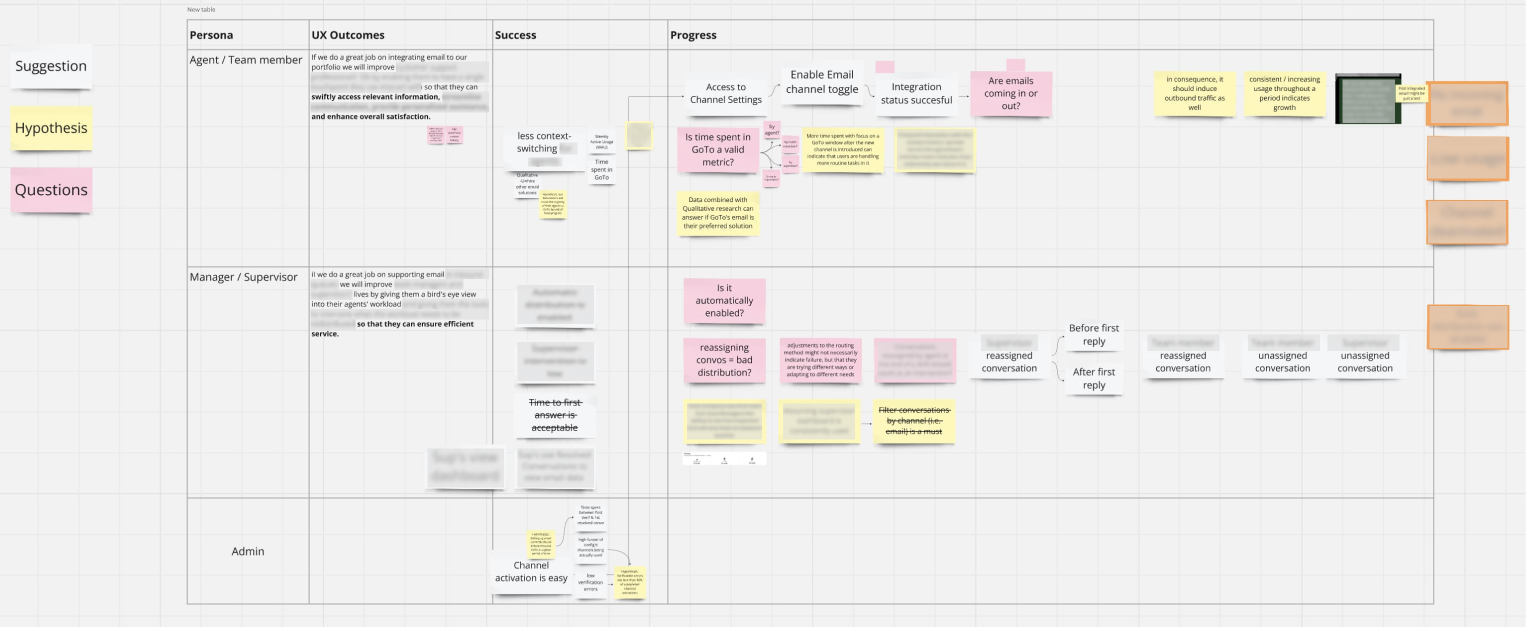

To strengthen UX's strategic role in our trio structure at GoTo, we used UX outcomes as a catalyst to define what success meant for us and which metrics we would track to get there.

What

Define metrics to assess the performance of our features from a user-centric perspective.

Why

Justifying the ROI of UX is easier when our metrics reflect aspects of the experience and express success in terms other stakeholders can see.

Problem

How might we choose metrics that show the impact of the user experience we are building? Once they are defined, how might we track our progress towards a shared understanding of success?

Business perspective

Awareness: with clearer data, the team becomes aware of how improving usability and customer satisfaction can support concrete business goals such as higher conversion, greater customer loyalty and lower costs.

Effectiveness: well-defined UX outcomes help mitigate risks by ensuring that features address real user needs while staying aligned with the business strategy.

User perspective

User-centricity: collaborating on UX outcomes as a trio helps us prioritize our users’ needs by observing their behaviors and pain points, and gives us tools to track how our feature development affects them over time.

Commitment: crafting our definition of success together, and setting up a board with the metrics that should take us there, leads the trio to share responsibility and keeps all of us committed to tracking our shared goal.

Process

We used Jared Spool’s UX Outcomes methodology to structure our collaboration. We started by gathering the team working on an email integration for our software, which we were about to release. While UX outcomes are ideal for guiding a team from the very start of feature development, they are also a strong resource in its final stages: defining them helps organize the artifacts produced during the exploration, definition and prototyping phases, and forces the team to revisit the priorities behind its design decisions.

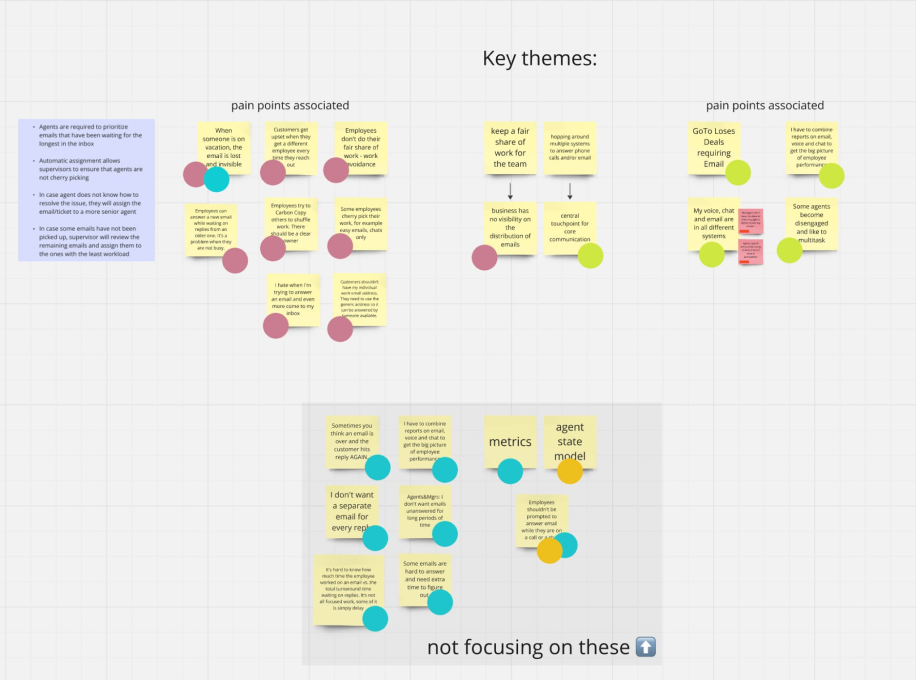

With two UX designers, a Product Manager and an Engineering Lead, we went back to analyze the current experience our users had to go through. We had already listed their key frustrations when dealing with email throughout their journey and validated them through research. Grounding our analysis in user insight is crucial if we aim to provide real value to users.

To define an MVP, the team had prioritized those key frustrations and selected the ones we would address. We then clustered them by common themes, which made it easier to see that at least two different UX outcomes were needed.

By then, the most compelling solutions to these frustrations were already included in our prototypes. With a clear understanding of the journey we were impacting, we were ready to draft our UX outcomes. Our framework was:

- If we do a great job on... (feature, product or service we are presenting)
- ... we will improve... (person whose life is improved) ...’s life by... (improvement)
- ... so that... (other consequential benefits to their lives)

After several rounds of trying different wordings, we arrived at a couple of statements that accurately described the value our feature would add to the product if done well. It was time to move on and select metrics that would show we were approaching our goal.

Spool’s methodology describes success metrics as the “finish line of a race”, because reaching them should mean we have achieved the goal we were aiming for. When a UX outcome is defined, there must be a clear, measurable indicator of what success looks like. And because success most likely involves a multi-faceted change in users’ experiences, we also need indicators along the way of how we are progressing, which makes defining progress metrics essential.

Our trio gathered once more to define what would tell us we had done a good job with our email integration. We added our hypotheses to the board, anticipating what we expected to change once the feature was released. Our suggestions for ways of measuring each hypothesis aimed to highlight specific, observable actions, and they sparked discussion about the best way to measure them, whether they actually reflected what we were looking for, and whether there were any technical constraints we needed to be aware of when setting up our tracking. In the end, we each came away with a few questions and action points, making the board a continuously evolving source of truth as we progressed towards release and beyond.

Our main deliverables from the process were the board we collaborated on (which we would keep adding to and updating as we learned more) and a metrics dashboard in our event analytics tool. The dashboard displayed the progress metrics we had defined with our hypotheses pinned alongside them, tying the data we observed closely to what it meant for our strategy. With the whole team committed to following up, we had a solid post-release strategy that grew out of great trio collaboration.